Human Intelligence: A digital anthropologist on the 'Wild West' of AI

Maaxa Labs on the Culture of AI

Human Intelligence is an occasional series to help Portland Business Journal readers better understand how they might use AI to improve their businesses. We'll highlight takeaways and best practices shared by people who are adding AI to their products or who study the technology.

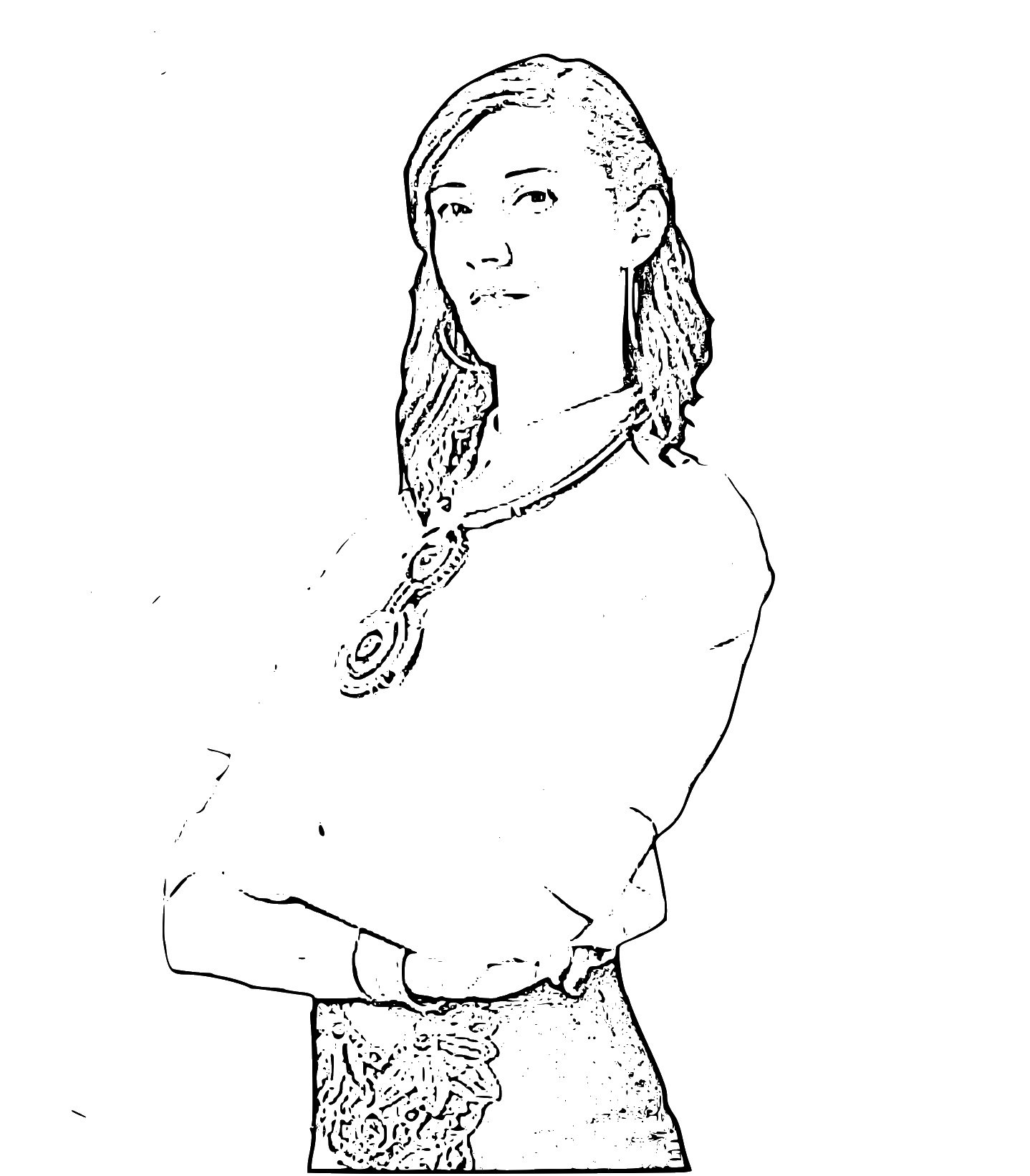

Ali Maaxa is a digital anthropologist and founder of Maaxa Labs

By Malia Spencer - Portland Inno

July 24, 2023, 11:40am PDT


Ali Maaxa is a consultant whose work helps organizations understand and use AI tools that have evolved from prediction and machine learning into generative AI.

By training she is an anthropologist with degrees in media studies and folklore, though she has spent the last six years working in-house for software-as-a-service companies using AI. Her recently launched consulting firm, Maaxa Labs, works on research and strategy surrounding AI, user experience and the human aspects of technology. She is also a founding member of the AI Governance Group, a collective of Portland professionals working to help organizations safely understand and leverage commercial AI tools.

I caught up with Maaxa in the latest edition of Human Intelligence. This interview has been edited for length and clarity.

How does anthropology fit into working in AI?

On one hand, it’s those traditional questions: What does it mean to be human? Where does the human end and the tool begin? Or where does the individual human end and the social begin? Those are questions that anthropologists and archaeologists have always been asking. In the digital realm, I ask how new digital tools replace or change the tools that we’ve always used, whether it's an amplifier for our voices or a talking drum that spreads a message even further than we could with our own voice. How do tools like the internet expand that, or how do they draw from those previous traditions? So for me, I ask how artificial intelligence increases our capacity to connect to each other or to other resources. What traditional modes of communication does it replace, and what does it change in the process? There are those individual what-does-it-mean-to-be-human questions, and then there is another series of questions: What do global systems look like for humans? How does the culture of cloud (computing) compare to previous global systems?

Studying the human experience, do you see other moments in history that are analogous to where we are right now in the broader awareness of AI technology?

The example I always use is from (philosopher and media theorist) Marshall McLuhan’s work. Let's look at the history of media. How have we produced machines that mediate our relationships with each other, with the resources we need and with the ecology around us? A really great example of this is the printing press, which led to the Gutenberg Bible. The Gutenberg Bible led to the wholesale, global spread of Christianity in book form, and it inspired, guided and sometimes constrained globalization in a way that very much affected modernity. There we have a religion, particularly North Atlantic Christianity, that was really present in one part of the world, and this medium transposed it into something that became a global system. So we look back and ask, OK, what did that replace? It replaced a lot of oral traditions. What did it invent or make possible that wasn’t possible before? Access to those original texts, so people all over the world could communicate remotely. And what are the politics around it? What does it enable or constrain? On the one hand, it enabled a global imagination. On the other hand, it constrained the ways we could imagine relating globally to something dominated by this one system. So when I work with my clients to understand what AI does in their organizations, I ask those questions. What does this make possible? What resources does this free up for you? What does it constrain? What does it lead you to not question?

There is a lot of hype right now around generative AI. What do you think about the current state of AI technology?

I think it is the Wild West. Except this Wild West needs to be peopled with those of us who have not conventionally been empowered to guide these conversations. There are a lot of highly technical ways of understanding the world. Understanding the world from the standpoint of someone who lives in a ZIP code that is marginalized by lenders because of AI, for instance, is also a highly technical way of knowing. So I would like to see more representatives of these communities sitting on AI panels right now. Ideally, this Wild West will have a lot more women and queer-identified people who understand how changes in technology affect populations that often don’t have a voice in these conversations.

We’re talking about AI at large, and there tends to be a moment toward the beginning of (a panel or presentation) where we sort of raise consciousness around what AI actually is. I think we’re at the point in the discourse where people are seeing articles on LinkedIn or Facebook or CNN that ask them to understand the whole of AI through the lens of a generative tool they may have tried out only recently. I tend to say AI is the newest iteration of a global technological system that uses statistics, in particular statistical representations of humans and the resources that we use, to predict where we’re going. The thing is, it relies on statistical representations, and those statistical ways of knowing are pieced together however the humans who developed the AI think they should be. Now we see all the end points that humans can influence, and also the points of failure in the system. That's the fear factor (in all of this): When do we have agency? Statistics and numbers are only one way of understanding experience, ecology and the world.